Email Marketing Comprehensive Guide to A/B Testing in Emails

Introduction

A. What is A/B Testing in Email Marketing?

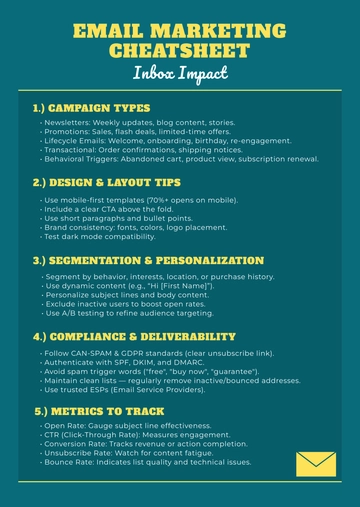

A/B testing, often referred to as split testing, is a fundamental email marketing technique in which two versions of an email campaign, labeled 'A' and 'B', are sent to separate, randomly selected portions of your list. The purpose is to measure how each version performs and determine which one produces better results. This helps email marketers make data-driven decisions, refine their email content, and optimize their campaigns for improved engagement and conversions.

A/B testing typically focuses on specific elements within an email, such as subject lines, body content, call-to-action buttons, and images. Changing only one element per test lets you attribute any difference in results to that element, which reveals what resonates most with your audience. The main strength of A/B testing is that it provides empirical evidence to support or refute assumptions, helping marketers fine-tune their email campaigns for maximum impact.

B. Why A/B Testing Matters

A/B testing is not just a valuable tool in the email marketer's toolkit; it's an essential practice. Its significance can be summarized through several key points:

Data-Driven Decision Making: A/B testing reduces guesswork. Instead of relying on hunches or assumptions about what will work best in an email campaign, marketers can base their decisions on measured results.

Improved Engagement: By testing different elements, email marketers can determine which variants resonate most with their audience. Applying those findings can lead to higher open and click-through rates, because subscribers receive content that is more relevant and appealing to them.

Increased Conversions: A/B testing extends beyond just opens and clicks. It enables marketers to refine the entire user journey, from the email to the landing page. Optimizing elements like calls-to-action can significantly boost conversion rates.

Enhanced Customer Understanding: Through A/B testing, marketers gain a deeper understanding of their audience's preferences and behaviors. This knowledge can inform future campaigns and even product development.

Continuous Improvement: A/B testing isn't a one-time endeavor. It's an ongoing process that allows marketers to refine and adapt their strategies over time. This iterative approach leads to continually improving email marketing performance.

In summary, A/B testing is a cornerstone of successful email marketing. It empowers marketers to make informed decisions, tailor their messages to their audience, and continually refine their strategies for better results.

Setting Up A/B Tests

A. Define Your Objectives

Before you embark on A/B testing in your email marketing campaigns, it's essential to establish clear objectives. Your objectives should align with your overall marketing goals. Common objectives include:

Increasing Open Rates: If your primary concern is getting more subscribers to open your emails, your A/B tests should focus on elements like subject lines and sender names.

Boosting Click-Through Rates: When your goal is to drive more traffic to your website or landing page, testing elements like calls-to-action and email content is critical.

Improving Conversion Rates: If your ultimate aim is to convert email recipients into customers, A/B testing should focus on the entire user journey, from email content to the landing page experience.

Reducing Unsubscribes: To retain your subscribers and reduce churn, consider testing the frequency and timing of your emails.

Clear objectives provide direction for your A/B testing efforts, ensuring that you're testing elements that directly impact your desired outcomes.

B. Segment Your Email List

Email list segmentation is a powerful technique that involves categorizing your subscribers into distinct groups based on various criteria, such as demographics, behavior, or purchase history. Segmenting your email list is critical for effective A/B testing because it allows you to target specific groups with relevant content.

Segmentation offers the following advantages:

Relevance: Subscribers in each segment are more likely to find your emails relevant, which can lead to higher engagement.

Personalization: Segment-specific A/B tests can result in highly personalized content, further increasing the chances of a positive response.

Comparative Testing: By testing different elements with segmented groups, you can understand how specific segments respond to variations, helping you tailor future campaigns.

Behavioral Insights: Segmenting based on user behavior can reveal valuable insights about the preferences and actions of different customer groups.
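Comparing how segments respond usually means breaking results down by segment and by variant. The Python sketch below assumes you can export one record per delivered email as a `(segment, variant, opened)` tuple; `rates_by_segment` is a hypothetical helper, and the records are invented purely for illustration. With real data, make sure each segment has enough recipients before reading much into per-segment differences.

```python
from collections import defaultdict


def rates_by_segment(records):
    """Return the open rate for every (segment, variant) pair.

    `records` holds one (segment, variant, opened) tuple per delivered email,
    where `opened` is True or False.
    """
    sent = defaultdict(int)
    opened = defaultdict(int)
    for segment, variant, did_open in records:
        sent[(segment, variant)] += 1
        opened[(segment, variant)] += int(did_open)
    return {pair: opened[pair] / sent[pair] for pair in sent}


# Invented example data: two segments, two variants
records = [
    ("new", "A", True), ("new", "A", False),
    ("new", "B", True), ("new", "B", True),
    ("loyal", "A", True), ("loyal", "A", True),
    ("loyal", "B", False), ("loyal", "B", True),
]
for (segment, variant), rate in sorted(rates_by_segment(records).items()):
    print(segment, variant, f"{rate:.0%}")
# loyal A 100%
# loyal B 50%
# new A 50%
# new B 100%
```

In this made-up data the two segments prefer opposite versions, which is exactly the kind of pattern an overall average would hide.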

Designing A/B Test Campaigns

A. Creating A/B Test Variations

When designing A/B test campaigns, it's essential to create meaningful and well-thought-out variations for your email content. These variations are what you will test against each other to determine which one performs better. Here's how to go about it:

Subject Lines: Craft two different subject lines—one for version A and another for version B. Ensure that they are concise, attention-grabbing, and reflect the content of the email.

Email Content: Experiment with different email content. This can include variations in the main message, imagery, and the placement of your call-to-action (CTA) elements. Ensure that the content aligns with your objectives.

Calls-to-Action: If your goal is to boost click-through rates or conversions, test different CTAs. This can involve variations in wording, color, placement, and design.

Images: If your emails include images, test different visuals or the absence of images. Ensure that the images you use are relevant to the message you're conveying.

B. Choosing A/B Test Tools

Selecting the right tools for conducting A/B tests is crucial for a seamless and effective testing process. Here are some popular tools you can consider:

| A/B Testing Tool | Description |
|---|---|
| HubSpot's A/B Testing Kit | This tool offers a user-friendly interface and comprehensive testing capabilities. |
| Google Optimize | A website testing tool, suited to landing pages rather than to the emails themselves. Google discontinued it in September 2023, so choose a current alternative for landing-page tests. |
| Freshmarketer | A reliable and cost-effective option, perfect for businesses looking to get started with A/B testing. |

Each of these tools comes with its own set of features and pricing, so it's essential to choose one that aligns with your specific needs and budget.

C. Setting Up Control and Test Groups

Creating control and test groups is a critical step in the A/B testing process. These groups allow you to conduct a controlled experiment, ensuring that your results are meaningful and reliable.

Control Group: This group receives the existing or standard version of your email (Version A). It serves as a baseline against which you'll compare the performance of your test group.

Test Group: This group receives the new or experimental version of your email (Version B). It's where you implement the changes or variations you want to test.

It's essential to assign subscribers to the control and test groups at random to minimize bias. Randomization makes it likely that any difference in performance between the two versions is caused by the changes you've made rather than by differences between the groups.
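If your email platform doesn't handle the split for you, random assignment takes only a few lines of code. The following is a minimal Python sketch, assuming your list is available as a plain list of email addresses; `split_ab` is a hypothetical helper name, not part of any email platform's API.

```python
import random


def split_ab(subscribers, test_fraction=0.5, seed=None):
    """Randomly assign subscribers to a control group (A) and a test group (B).

    Shuffling a copy of the list gives every subscriber the same chance of
    landing in either group, whatever order the list was exported in.
    """
    rng = random.Random(seed)  # a seeded generator makes the split reproducible
    shuffled = list(subscribers)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * test_fraction)
    test_group = shuffled[:cut]      # receives Version B
    control_group = shuffled[cut:]   # receives Version A
    return control_group, test_group


emails = [f"user{i}@example.com" for i in range(10)]
control, test = split_ab(emails, seed=42)
print(len(control), len(test))  # 5 5
```

Fixing the seed makes the split reproducible, which helps if you later need to rebuild the same groups for analysis.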

D. Randomizing Your Test Groups

Randomization is crucial in A/B testing because it spreads factors you can't control, such as subscriber location or past engagement, evenly across the control and test groups, so that they don't favor one version. Here's how you can implement randomization effectively:

Use a Testing Tool: Most A/B testing tools have built-in randomization features, so subscribers are allocated to each group automatically and without bias.

Random Sampling: If you're manually dividing your subscribers, use random sampling techniques to ensure that each subscriber has an equal chance of ending up in either the control or test group.

Avoiding Biases: Be cautious about unintentional biases. For example, if you're testing a time-sensitive promotion, account for time zones, either by sending both versions at the same time or by randomizing within each time zone so that both groups contain the same mix of time zones.

Together with an adequate sample size, proper randomization helps ensure that your A/B tests are statistically valid and that the results reflect the impact of the changes you've made.
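When a factor such as time zone could skew results, one option is stratified randomization: group subscribers by that factor, then split each group at random. Below is a minimal Python sketch, assuming each subscriber is a dict with a `timezone` field; that schema and the `stratified_split` name are hypothetical, so adapt the key to your own data.

```python
import random
from collections import defaultdict


def stratified_split(subscribers, key, seed=None):
    """Split subscribers into groups A and B separately within each stratum.

    `subscribers` is a list of dicts and `key` names the field to stratify on
    (for example "timezone"). Splitting each stratum at random keeps the mix
    of that field nearly identical in both groups.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for sub in subscribers:
        strata[sub[key]].append(sub)

    group_a, group_b = [], []
    for members in strata.values():
        rng.shuffle(members)
        half = len(members) // 2
        # With an odd-sized stratum, randomly decide which group gets the extra member
        if len(members) % 2 and rng.random() < 0.5:
            half += 1
        group_a.extend(members[:half])
        group_b.extend(members[half:])
    return group_a, group_b


subscribers = (
    [{"email": f"ny{i}@example.com", "timezone": "America/New_York"} for i in range(4)]
    + [{"email": f"ldn{i}@example.com", "timezone": "Europe/London"} for i in range(6)]
)
group_a, group_b = stratified_split(subscribers, "timezone", seed=1)
print(len(group_a), len(group_b))  # 5 5
```

Because each time zone is split separately, both groups end up with nearly the same mix of time zones; when a stratum has an odd number of subscribers, the extra one goes to A or B at random rather than always to the same group.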

Running A/B Tests

A. Scheduling Your A/B Tests

The timing of your A/B tests can significantly impact the results. Consider the following scheduling factors:

Timing of Email Sends: Take into account the best days and times for your audience. For instance, B2B emails may perform better during weekdays, while B2C emails might excel on weekends.

Frequency: Decide how often you'll conduct A/B tests. Frequent testing can provide more data, but too much can overwhelm your subscribers and dilute your results.

Campaign Duration: Determine the duration of your tests. Short tests may not capture long-term trends, while extended tests can lead to delayed optimization.

B. Testing Timing and Frequency

The timing and frequency of your A/B tests depend on your campaign objectives. For instance:

Regular Testing: If you're fine-tuning elements like subject lines or email content, regular testing (e.g., weekly or monthly) can help you make iterative improvements.

Event-Driven Testing: Some tests are tied to specific events or promotions, and the timing aligns with these occasions.

Continuous Monitoring: For ongoing optimization, consider monitoring key metrics continuously and making changes as necessary.

Balancing Act: Test often enough to keep learning, but not so often that subscribers are overwhelmed by test emails.

Choosing the timing and frequency of your tests requires a delicate balance to ensure that your results are both meaningful and actionable. The right approach depends on your specific goals and your audience's preferences.

Analyzing A/B Test Results

A. Interpreting Data

Interpreting A/B test data is a crucial step in the optimization process. After your test has run, you'll have data to analyze. Here's how to interpret your A/B test results effectively:

Key Metrics: Start by looking at key metrics such as open rates, click-through rates, conversion rates, and revenue generated. Compare these metrics between the control and test groups.

Statistical Significance: Assess the statistical significance of your results. Statistical significance helps determine whether the differences between your test and control groups are due to the changes you made or if they could be attributed to chance.

Data Patterns: Look for patterns and trends in your data. Are there noticeable differences in performance between the A and B versions? Analyze these differences to understand what's working and what's not.
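The metric comparison above can be expressed as a small data structure. This Python sketch assumes you can export delivered, unique-open, unique-click, conversion, and revenue totals for each variant; the `VariantResults` class and the sample figures are illustrative, not output from any particular platform. It computes every rate per delivered email. Some platforms report click-to-open rate (clicks divided by opens) instead, so match the definitions your tool uses.

```python
from dataclasses import dataclass


@dataclass
class VariantResults:
    """Raw totals for one email variant, as exported from a campaign report."""
    name: str
    delivered: int
    opens: int        # unique opens
    clicks: int       # unique clicks
    conversions: int
    revenue: float

    # All rates below are calculated per delivered email.
    def open_rate(self) -> float:
        return self.opens / self.delivered

    def click_through_rate(self) -> float:
        return self.clicks / self.delivered

    def conversion_rate(self) -> float:
        return self.conversions / self.delivered

    def revenue_per_email(self) -> float:
        return self.revenue / self.delivered


# Illustrative totals, not real campaign data
control = VariantResults("A", delivered=5000, opens=1100, clicks=240, conversions=30, revenue=1500.0)
test = VariantResults("B", delivered=5000, opens=1250, clicks=260, conversions=36, revenue=1750.0)

for v in (control, test):
    print(
        v.name,
        f"open {v.open_rate():.1%}",
        f"CTR {v.click_through_rate():.1%}",
        f"conversion {v.conversion_rate():.2%}",
        f"revenue/email {v.revenue_per_email():.2f}",
    )
```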

B. Understanding Statistical Significance

Statistical significance is a crucial concept in A/B testing, as it helps you determine whether your results are reliable or simply due to chance. To understand statistical significance:

P-Value: The p-value is the probability of observing a difference at least as large as the one you measured if there were actually no difference between the versions. A p-value below a threshold chosen before the test, most commonly 0.05, is conventionally treated as statistically significant.

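Confidence Intervals: Analyze confidence intervals around key metrics. If the 95% intervals for the two versions don't overlap, the difference is significant; overlapping intervals, however, do not by themselves rule out significance. A more direct check is the confidence interval for the difference between the two versions: if it excludes zero, the result is significant at the corresponding level.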

Sample Size: The size of your sample affects the confidence in your results. Larger sample sizes increase the likelihood of detecting small but meaningful differences.
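One common way to check significance for rate metrics such as open or click-through rate is a two-proportion z-test. The Python sketch below uses only the standard library (Python 3.8+ for `statistics.NormalDist`). It returns a two-sided p-value and a confidence interval for the difference between the two rates, and it estimates the sample size needed per group. It relies on the normal approximation, which assumes fairly large groups; the function names and example counts are illustrative assumptions, and dedicated calculators or statistics libraries may use slightly different methods.

```python
from math import ceil, sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b, confidence=0.95):
    """Two-sided z-test comparing two rates, e.g. opens / delivered.

    Returns (p_value, (low, high)), where (low, high) is a confidence
    interval for the difference rate_b - rate_a. Uses the normal
    approximation, so both groups should be reasonably large.
    """
    rate_a, rate_b = successes_a / n_a, successes_b / n_b
    diff = rate_b - rate_a

    # Under the null hypothesis both versions share one pooled rate.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # The interval uses the unpooled standard error of the difference.
    se_diff = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return p_value, (diff - z_crit * se_diff, diff + z_crit * se_diff)


def sample_size_per_group(base_rate, target_rate, alpha=0.05, power=0.8):
    """Approximate recipients needed in EACH group to detect base_rate -> target_rate."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = base_rate * (1 - base_rate) + target_rate * (1 - target_rate)
    return ceil((z_alpha + z_power) ** 2 * variance / (target_rate - base_rate) ** 2)


# Illustrative counts: 1,100 vs 1,250 opens out of 5,000 delivered each
p_value, (low, high) = two_proportion_z_test(1100, 5000, 1250, 5000)
print(f"p-value: {p_value:.4f}")
print(f"95% CI for B - A: {low:+.2%} to {high:+.2%}")
print(sample_size_per_group(0.20, 0.22))
```

In the example, the interval for B minus A lies entirely above zero, consistent with the small p-value. The sample-size estimate shows why small lifts need large lists: detecting a rise from 20% to 22% needs roughly 6,500 recipients per version at a 5% significance level and 80% power.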

C. Identifying Winning Variations

Identifying the winning variation is the ultimate goal of A/B testing. The winning variation is the one that performs best on the metric tied to your objective; sometimes that turns out to be the control. To determine the winning variation:

Higher Metrics: Look for the variation with the better result on the primary metric you chose before the test, such as open rate for a subject-line test or click-through rate for a CTA test.

Statistical Significance: Ensure that the difference between the variations is statistically significant, indicating that it is unlikely to be due to random chance.

Consistency: Check that the result holds up on related metrics. A variation that wins on opens but loses on clicks or conversions may not be a true winner.
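The three checks above can be combined into a simple decision rule. The hypothetical `pick_winner` function below declares a winner only when the primary-metric difference is significant and the leading version does not trail on any secondary metric. The secondary check compares point estimates only, not significance, and the `tolerance` parameter is an assumption you would set for your own campaigns.

```python
def pick_winner(primary, secondary, p_value, alpha=0.05, tolerance=0.0):
    """Apply the winner criteria: better primary metric, significance, consistency.

    primary:   {"A": rate, "B": rate} for the metric chosen before the test
    secondary: {metric_name: {"A": rate, "B": rate}, ...} consistency checks
    p_value:   p-value for the primary-metric difference
    tolerance: how far the leader may trail on a secondary metric (an assumption)
    """
    if p_value >= alpha:
        return "inconclusive: primary difference is not statistically significant"

    leader = "B" if primary["B"] > primary["A"] else "A"
    other = "A" if leader == "B" else "B"

    # Compares point estimates only; these checks are not significance tests.
    for name, rates in secondary.items():
        if rates[leader] < rates[other] - tolerance:
            return f"inconclusive: {leader} wins on the primary metric but trails on {name}"
    return leader


print(pick_winner({"A": 0.22, "B": 0.25}, {"click-through rate": {"A": 0.048, "B": 0.052}}, p_value=0.0004))
# B
print(pick_winner({"A": 0.22, "B": 0.25}, {"click-through rate": {"A": 0.048, "B": 0.041}}, p_value=0.0004))
# inconclusive: B wins on the primary metric but trails on click-through rate
```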

D. Learning from Failed Tests

Not all A/B tests yield positive results. Some tests may fail to show significant improvements. It's essential to learn from failed tests:

Hypothesis Review: Reevaluate your initial hypotheses. What assumptions were incorrect, and why did the changes not lead to better results?

Test Iteration: Failed tests provide an opportunity for further experimentation. Consider modifying your approach and retesting to uncover a winning variation.

Implementing Successful Changes

A. Scaling Winning Strategies

When you've identified a winning variation through A/B testing, it's time to scale the successful changes:

Replication: Implement the winning variation in your future email campaigns to consistently improve your performance.

A/B Testing Continues: While you scale the winning strategy, don't stop testing. A/B testing should be an ongoing process to fine-tune your tactics continuously.

B. Optimization for Better Results

A/B testing is just one part of the optimization process. To achieve better results, consider the following:

Iterative Testing: Continue to test and refine your email elements. Regular testing ensures that you adapt to changing customer behaviors.

Segmentation: Use the insights gained from A/B testing to refine your email list segmentation. Deliver more targeted content for increased engagement.

C. Documentation and Knowledge Sharing

Documenting your A/B tests and sharing knowledge within your team is vital for long-term success:

Documentation: Keep records of your A/B test results, including hypotheses, test details, and outcomes. This documentation helps you track progress over time.

Knowledge Sharing: Share insights and best practices with your team. Ensure that the lessons learned from A/B testing are communicated and applied across your organization.

Documenting A/B Test Results:

| Test ID | Test Element | Variation A | Variation B | Key Metric | Statistical Significance | Outcomes |
|---|---|---|---|---|---|---|
| 001 | Subject Line | Engaging | Informative | Open Rate | Significant | Variation A Wins |
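A test log like the table above can be kept as a CSV file. This sketch uses Python's standard `csv` and `dataclasses` modules; the `ABTestRecord` fields mirror the table's columns, while the extra `hypothesis` field (for the hypotheses mentioned under Documentation) and the `write_log` helper are illustrative additions.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ABTestRecord:
    """One row of an A/B test log; fields mirror the documentation table."""
    test_id: str
    test_element: str
    variation_a: str
    variation_b: str
    key_metric: str
    statistical_significance: str
    outcome: str
    hypothesis: str = ""  # optional: what you expected and why


def write_log(records, stream):
    """Write records as CSV (with a header row) to any text stream."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(ABTestRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


log = [
    ABTestRecord("001", "Subject Line", "Engaging", "Informative",
                 "Open Rate", "Significant", "Variation A Wins"),
]
buffer = io.StringIO()
write_log(log, buffer)
print(buffer.getvalue())
```

To keep the log on disk, pass a file opened with `open("ab_test_log.csv", "w", newline="")` instead of the string buffer; the file name is only an example.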

Conclusion

A. Recap of A/B Testing in Email Marketing

In this comprehensive guide, we've explored the powerful world of A/B testing in email marketing. A/B testing is a strategic tool that empowers marketers to make data-driven decisions, leading to higher engagement, increased conversions, and improved customer understanding. By comparing different versions of your email campaigns and systematically analyzing the results, you can unlock the full potential of your email marketing efforts.

B. Achieving Email Marketing Success

As you apply A/B testing to your own email campaigns, keep these key takeaways in mind:

Clear Objectives: Define your objectives before conducting A/B tests to ensure that your efforts are aligned with your overall marketing goals.

Segmentation: Segment your email list to send tailored content to different groups, increasing the relevance of your emails.

Meaningful Variations: Create meaningful variations for A/B tests, focusing on elements that matter most for your objectives.

Statistical Significance: Understand the concept of statistical significance to ensure that your test results are reliable.

Iterative Improvement: A/B testing is an ongoing process. Continuously refine your email marketing strategies based on what you've learned.

Documentation and Sharing: Document your A/B test results and share knowledge within your team for long-term success.

A/B testing is not a one-time effort; it's a dynamic process that evolves with your audience's preferences and behaviors. It equips you with the tools to adapt, optimize, and consistently achieve better results in your email marketing campaigns.

If you have further questions or need additional assistance, please feel free to reach out to us.

[Your Company Name]

[Your Company Email]

[Your Company Address]

Optimize your email campaigns with the Email Marketing Comprehensive Guide to A/B Testing in Emails Template from Template.net. This editable and customizable guide empowers you to refine strategies for maximum impact. Tailor it effortlessly to your needs, with the added advantage of being editable in our AI Editor Tool, ensuring a personalized A/B testing experience.