Free Performance Testing Requirement Checklist

Authored by: | [your name] |

Company name: | [your company name] |

Employee name: | [employee name] |

Performance Assessment Date: | February 17, 2052 |

This checklist helps software developers and system engineers understand and validate the performance of their systems. Working through it supports stress testing, improves reliability, scalability, and resource efficiency, and helps identify bottlenecks, establish benchmarks, and inform decisions about performance improvements. Follow it for a thorough and consistent approach to improving system performance.

Performance Testing Objectives:

Are performance testing objectives clearly defined?

Are the specific performance metrics identified (e.g., response time, throughput, resource utilization)?

Have performance goals been established for each metric?
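
One way to make the metrics and goals above checkable is to compute them from raw measurements and compare each against a target. The sketch below is illustrative only: the function names, the metric keys, and the goal values in `GOALS` are assumptions, not part of this checklist.

```python
import statistics

def summarize(latencies_ms, duration_s):
    """Summarize per-request latencies (ms) collected over duration_s seconds."""
    ordered = sorted(latencies_ms)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(ordered, n=100, method="inclusive")[94]
    return {
        "mean_ms": statistics.fmean(ordered),
        "p95_ms": p95,
        "throughput_rps": len(ordered) / duration_s,
    }

# Hypothetical goals: metric name -> (kind of bound, limit).
GOALS = {
    "p95_ms": ("max", 500.0),
    "throughput_rps": ("min", 50.0),
}

def check_goals(summary, goals=GOALS):
    """Return the names of metrics that miss their goal."""
    failures = []
    for metric, (kind, limit) in goals.items():
        value = summary[metric]
        ok = value <= limit if kind == "max" else value >= limit
        if not ok:
            failures.append(metric)
    return failures
```

An empty list from `check_goals` means every metric met its goal; any names returned are the metrics to investigate.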

Test Environment Setup:

Is the test environment representative of the production environment?

Have all necessary hardware, software, and network configurations been replicated?

Is there sufficient infrastructure to simulate realistic user loads?

Performance Test Scenarios:

Have performance test scenarios been identified based on user behavior patterns?

Do the scenarios cover a range of usage conditions (e.g., peak load, normal load, stress conditions)?

Have boundary cases and edge conditions been included?
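
The usage conditions above can be written down as explicit scenario definitions so that each one is run deliberately. This is a minimal sketch; the `Scenario` type, the load multipliers, and the single-user boundary case are assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Scenario:
    name: str
    virtual_users: int
    duration_s: int

def build_scenarios(normal_users, duration_s=600):
    """Derive normal, peak, and stress scenarios from a normal-load baseline."""
    # Multipliers are illustrative assumptions, not recommendations.
    factors = {"normal": 1.0, "peak": 2.0, "stress": 4.0}
    scenarios = [
        Scenario(name, int(normal_users * factor), duration_s)
        for name, factor in factors.items()
    ]
    # One simple boundary case: a single user, useful as a no-contention baseline.
    scenarios.append(Scenario("single_user", 1, duration_s))
    return scenarios
```

The resulting list can be handed to whichever load tool the team already uses; the multipliers should come from observed traffic rather than these placeholder values.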

Test Data Management:

Is test data realistic and representative of production data?

Have data privacy and security concerns been addressed?

Is there a mechanism to generate and refresh test data?
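
A seeded generator is one possible mechanism for producing and refreshing test data without copying real records. The sketch below assumes a hypothetical user record with `id`, `username`, `email`, and `order_count` fields; none of these come from this checklist.

```python
import random
import string

def generate_users(count, seed=42):
    """Generate synthetic user records; the same seed yields the same data."""
    rng = random.Random(seed)  # local generator so results are reproducible
    users = []
    for i in range(count):
        name = "".join(rng.choices(string.ascii_lowercase, k=8))
        users.append({
            "id": i,
            "username": name,
            # The reserved .test domain avoids addressing real mailboxes.
            "email": f"{name}@example.test",
            "order_count": rng.randint(0, 50),
        })
    return users
```

Changing the seed refreshes the data set, while keeping it fixed makes runs comparable. Purely synthetic values also sidestep most privacy concerns, though they may not match production distributions.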

Performance Test Execution:

Have performance tests been scheduled during off-peak hours?

Is there a strategy to monitor and analyze test results in real time?

Are there protocols in place to handle unexpected errors or failures during testing?

Performance Test Reporting:

Will performance test reports be generated and distributed?

Do reports include detailed performance metrics, analysis, and recommendations?

Is there a process to review and act upon findings from performance test reports?

Performance Test Maintenance:

Is there a plan to regularly review and update performance test scenarios?

Will performance tests be rerun periodically to detect regressions?

Are there mechanisms in place to address changes in the application or infrastructure?
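
Periodic reruns detect regressions only if results are compared against a stored baseline. A minimal sketch of that comparison follows, assuming metrics where lower is better (such as latency) and a hypothetical 10% tolerance.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return metrics whose current value exceeds the baseline by more than tolerance.

    Assumes lower values are better (e.g. latency in ms).
    """
    regressions = {}
    for metric, base in baseline.items():
        # Skip metrics missing from the new run or with an unusable baseline.
        if metric not in current or base <= 0:
            continue
        change = (current[metric] - base) / base
        if change > tolerance:
            regressions[metric] = round(change, 3)
    return regressions
```

For example, a baseline `p95_ms` of 200 and a current value of 250 is a 25% increase and would be flagged, while a 5% increase in mean latency would not. The tolerance should reflect the normal run-to-run variation of the environment.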

Stakeholder Communication:

Have performance testing objectives and results been effectively communicated to stakeholders?

Is there a feedback loop for stakeholders to provide input on performance requirements?



You may also like

- Cleaning Checklist

- Daily Checklist

- Travel Checklist

- Self Care Checklist

- Risk Assessment Checklist

- Onboarding Checklist

- Quality Checklist

- Compliance Checklist

- Audit Checklist

- Registry Checklist

- HR Checklist

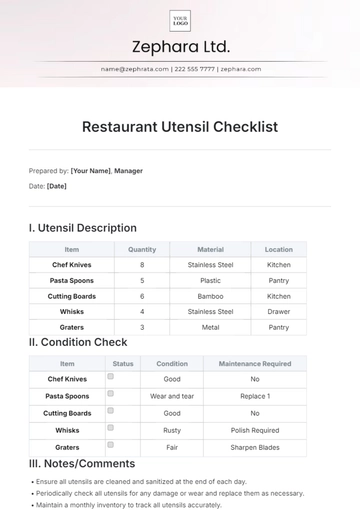

- Restaurant Checklist

- Checklist Layout

- Creative Checklist

- Sales Checklist

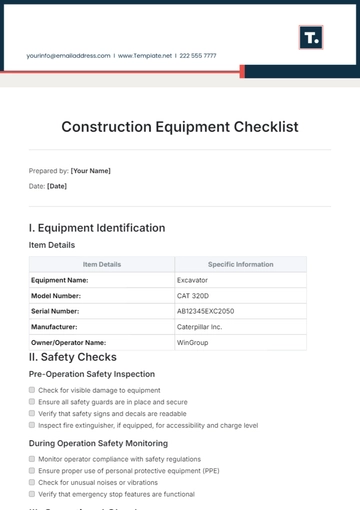

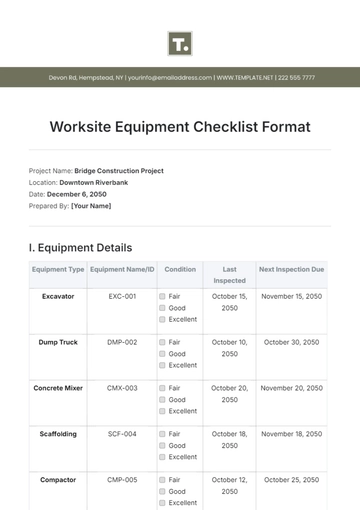

- Construction Checklist

- Task Checklist

- Professional Checklist

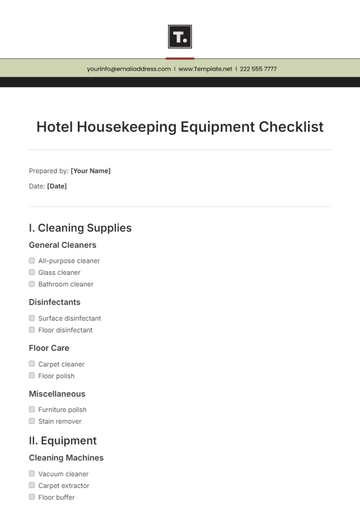

- Hotel Checklist

- Employee Checklist

- Moving Checklist

- Marketing Checklist

- Accounting Checklist

- Camping Checklist

- Packing Checklist

- Real Estate Checklist

- Cleaning Checklist Service

- New Employee Checklist

- Food Checklist

- Home Inspection Checklist

- Advertising Checklist

- Event Checklist

- SEO Checklist

- Assessment Checklist

- Inspection Checklist

- Baby Registry Checklist

- Induction Checklist

- Employee Training Checklist

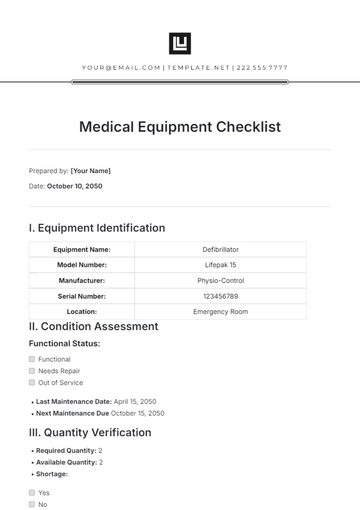

- Medical Checklist

- Safety Checklist

- Site Checklist

- Job Checklist

- Service Checklist

- Nanny Checklist

- Building Checklist

- Work Checklist

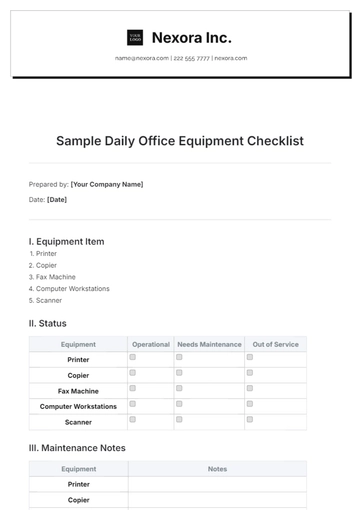

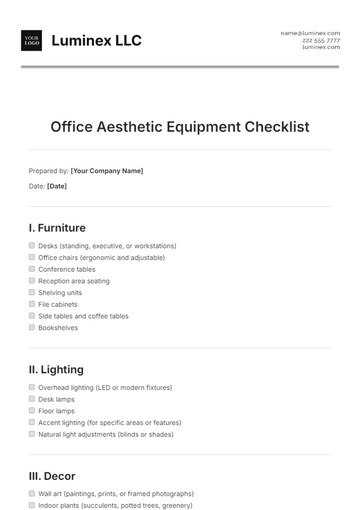

- Office Checklist

- Training Checklist

- Website Checklist

- IT and Software Checklist

- Performance Checklist

- Project Checklist

- Startup Checklist

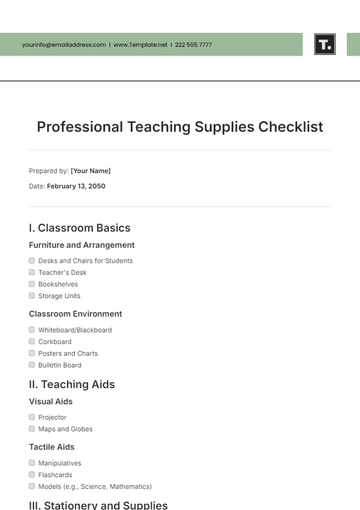

- Education Checklist

- Home Checklist

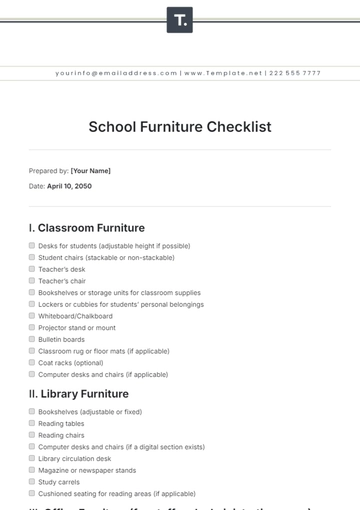

- School Checklist

- Maintenance Checklist

- Planning Checklist

- Manager Checklist

- Wedding Checklist

- Vehicle Checklist

- Travel Agency Checklist

- Vehicle Inspection Checklist

- Interior Design Checklist

- Backpacking Checklist

- Business Checklist

- Legal Checklist

- Nursing Home Checklist

- Weekly Checklist

- Recruitment Checklist

- Salon Checklist

- Baby Checklist

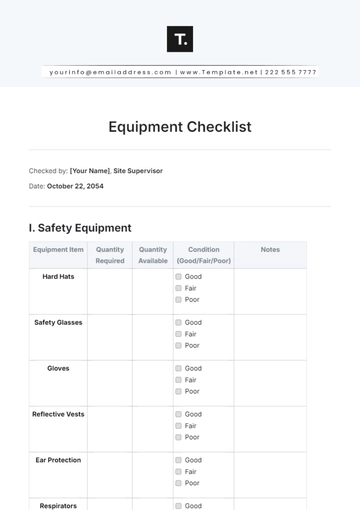

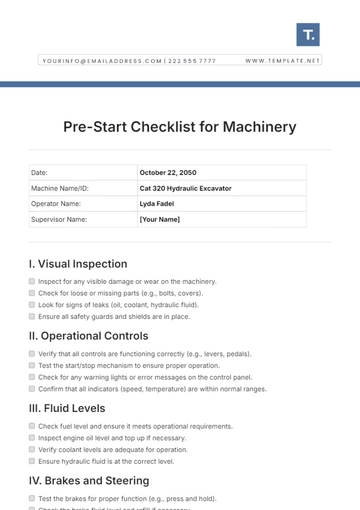

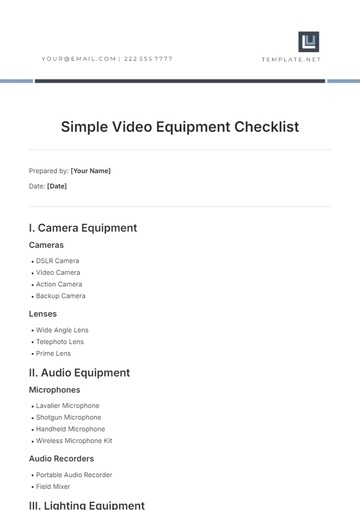

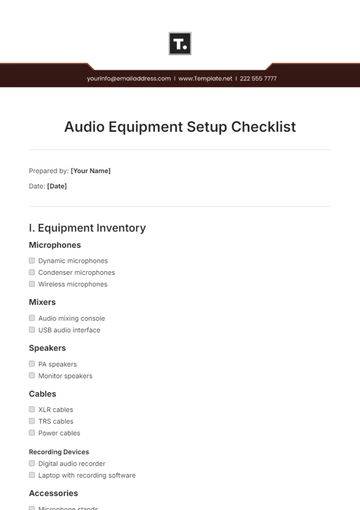

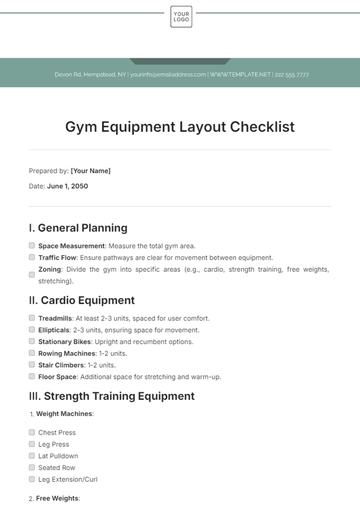

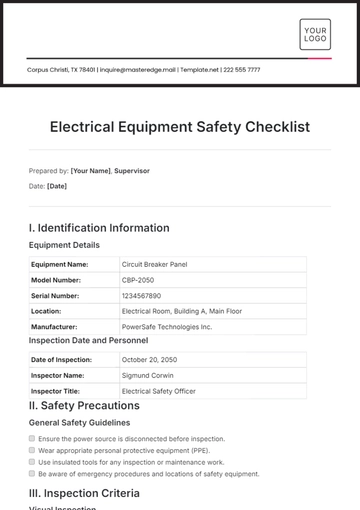

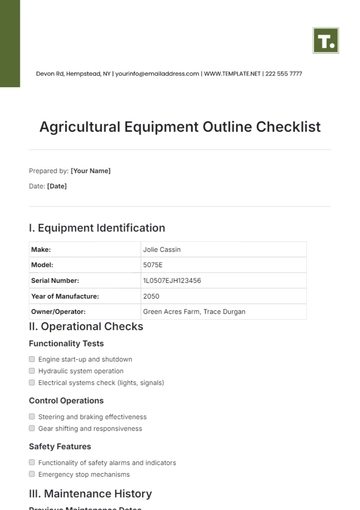

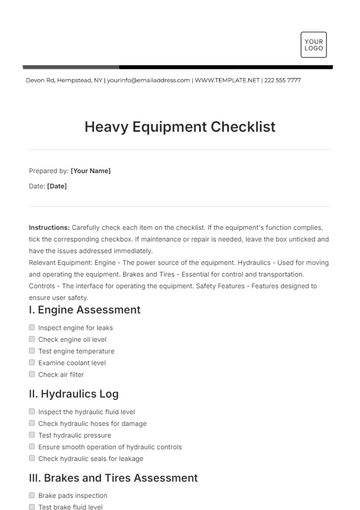

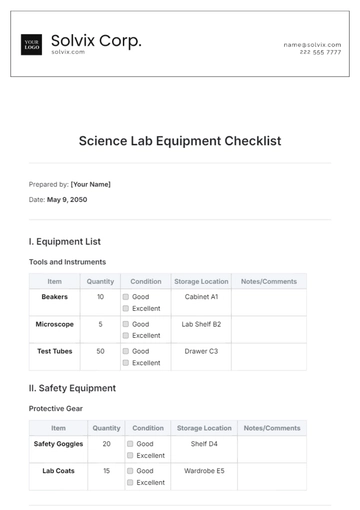

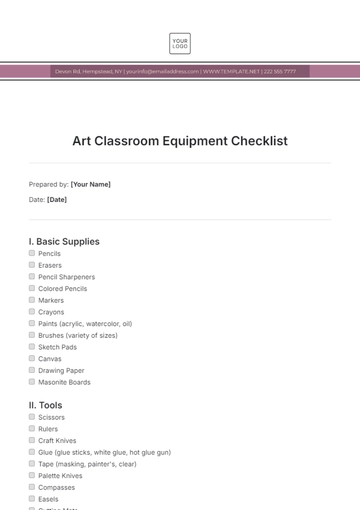

- Equipment Checklist

- Trade Show Checklist

- Party Checklist

- Hospital Bag Checklist

- Evaluation Checklist

- Agency Checklist

- First Apartment Checklist

- Hiring Checklist

- Opening Checklist

- Small Business Checklist

- Rental Checklist

- College Dorm Checklist

- New Puppy Checklist

- University Checklist

- Building Maintenance Checklist

- Work From Home Checklist

- Student Checklist

- Application Checklist