How to Avoid AI Hallucinations: A Strategic Guide


Nov 25, 2025

As artificial intelligence plays a larger role in our daily lives and becomes a necessary ally in improving workflows, productivity, and collaboration, AI hallucinations remain a constant challenge across nearly all models and iterations.

Hallucinations occur when an AI system produces information that is irrelevant, out of context, fabricated, or misleading, yet confidently presents it as fact.

From conversational assistants generating inaccurate explanations to recommendation engines offering blatantly incorrect suggestions, hallucinations can undermine trust, damage user experience, and even lead to harmful outcomes in high-stakes environments.

Fortunately, hallucinations aren’t an unsolvable problem. While no AI system can be perfect, developers and organizations can significantly reduce the frequency and severity of hallucinated outputs by strengthening data practices, improving testing, and maintaining ongoing oversight.

Here are practical, strategic tips to help keep your AI writing applications dependable, accurate, and aligned with user expectations.

Before you can apply fixes, you need to understand what causes AI design or writing tools to hallucinate and produce strange or unusable outputs. Hallucinations typically arise from three core factors:

Recognizing how these factors interact helps teams design better mitigation strategies. A model trained with limited visibility can’t suddenly produce perfect accuracy, but refining the dataset and clarifying inputs can dramatically improve reliability.

Data is the foundation of any AI system—and a poor foundation leads to unstable results. Ensuring high-quality data is one of the most effective ways to minimize hallucinations. Therefore, your engineering and content teams must:

By prioritizing data cleanliness and diversity, developers empower their models to reason more accurately and confidently.
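As a rough illustration of data cleanliness, here is a minimal Python sketch that drops empty, very short, and duplicate text records before they reach a training or retrieval pipeline. The function name `clean_records` and the `min_chars` threshold are assumptions made for this example, not part of any particular toolchain, and real pipelines usually need far more than this.

```python
def clean_records(records, min_chars=20):
    """Drop empty, too-short, and duplicate text records, preserving order.

    `min_chars` is an illustrative threshold, not a recommended value.
    """
    seen = set()
    cleaned = []
    for text in records:
        # Collapse whitespace and lowercase so trivial variants count as duplicates.
        normalized = " ".join(text.split()).lower()
        if len(normalized) < min_chars:
            continue  # too short to carry useful information
        if normalized in seen:
            continue  # duplicate of a record we already kept
        seen.add(normalized)
        cleaned.append(text.strip())
    return cleaned


raw = [
    "  The Eiffel Tower is in Paris, France. ",
    "the eiffel tower is in paris,   france.",
    "ok",
    "",
]
print(clean_records(raw))
```

Only the first record survives here: the second is a whitespace and casing variant of it, and the last two fall below the length threshold.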

Even the best-trained model requires extensive testing before deployment. Testing helps identify edge cases, gaps, or patterns in hallucinated responses.

Effective testing protocols include:

Treat testing as an ongoing cycle—not a one-time event. The more thoroughly you test, the fewer surprises you encounter after deployment.
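One way to make testing repeatable is a small regression suite of prompts with known answers that runs before every release. The sketch below assumes a hypothetical `model_fn` callable that takes a prompt and returns text; `stub_model` stands in for a real model so the example runs on its own. The substring check is deliberately crude and would miss many real hallucinations.

```python
def run_regression_suite(model_fn, cases):
    """Return the cases whose answer does not contain the expected fact."""
    failures = []
    for prompt, expected in cases:
        answer = model_fn(prompt)
        # Crude check: the expected fact must appear somewhere in the answer.
        if expected.lower() not in answer.lower():
            failures.append((prompt, expected, answer))
    return failures


def stub_model(prompt):
    """Hypothetical stand-in for a real model call."""
    canned = {
        "What is the capital of France?": "The capital of France is Paris.",
        "How many days are in a week?": "There are eight days in a week.",
    }
    return canned.get(prompt, "I don't know.")


CASES = [
    ("What is the capital of France?", "Paris"),
    ("How many days are in a week?", "seven"),
]

for prompt, expected, answer in run_regression_suite(stub_model, CASES):
    print(f"FAIL: {prompt!r} expected {expected!r}, got {answer!r}")
```

Re-running the same cases after each model or prompt change turns testing into the ongoing cycle described above rather than a one-time check.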

Once your AI system is live, monitoring becomes essential. Even well-tested models can drift over time due to new user behavior, changing environments, or evolving expectations.

Continuous monitoring systems can:

Real-time monitoring allows teams to step in before hallucinations create negative experiences or propagate incorrect information. Think of monitoring as your AI’s safety net—always active, always watching.
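A monitoring loop can be as simple as tracking how often recent responses were flagged and alerting when that rate crosses a threshold. The sketch below assumes some upstream check (a human reviewer, a fact-checking step, or a user report) already decides whether a response is flagged. The class name `HallucinationMonitor`, the window size, and the alert rate are all illustrative assumptions.

```python
from collections import deque


class HallucinationMonitor:
    """Track the share of flagged responses over a rolling window."""

    def __init__(self, window=100, alert_rate=0.05):
        # deque with maxlen discards the oldest event once the window is full.
        self.events = deque(maxlen=window)
        self.alert_rate = alert_rate

    def record(self, flagged):
        self.events.append(bool(flagged))

    def flagged_rate(self):
        if not self.events:
            return 0.0
        return sum(self.events) / len(self.events)

    def should_alert(self):
        return self.flagged_rate() > self.alert_rate


monitor = HallucinationMonitor(window=10, alert_rate=0.2)
for flagged in [False] * 8 + [True] * 2:
    monitor.record(flagged)
print(monitor.flagged_rate(), monitor.should_alert())
```

A rolling window keeps the signal focused on recent behavior, which is what matters when a model drifts after deployment.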

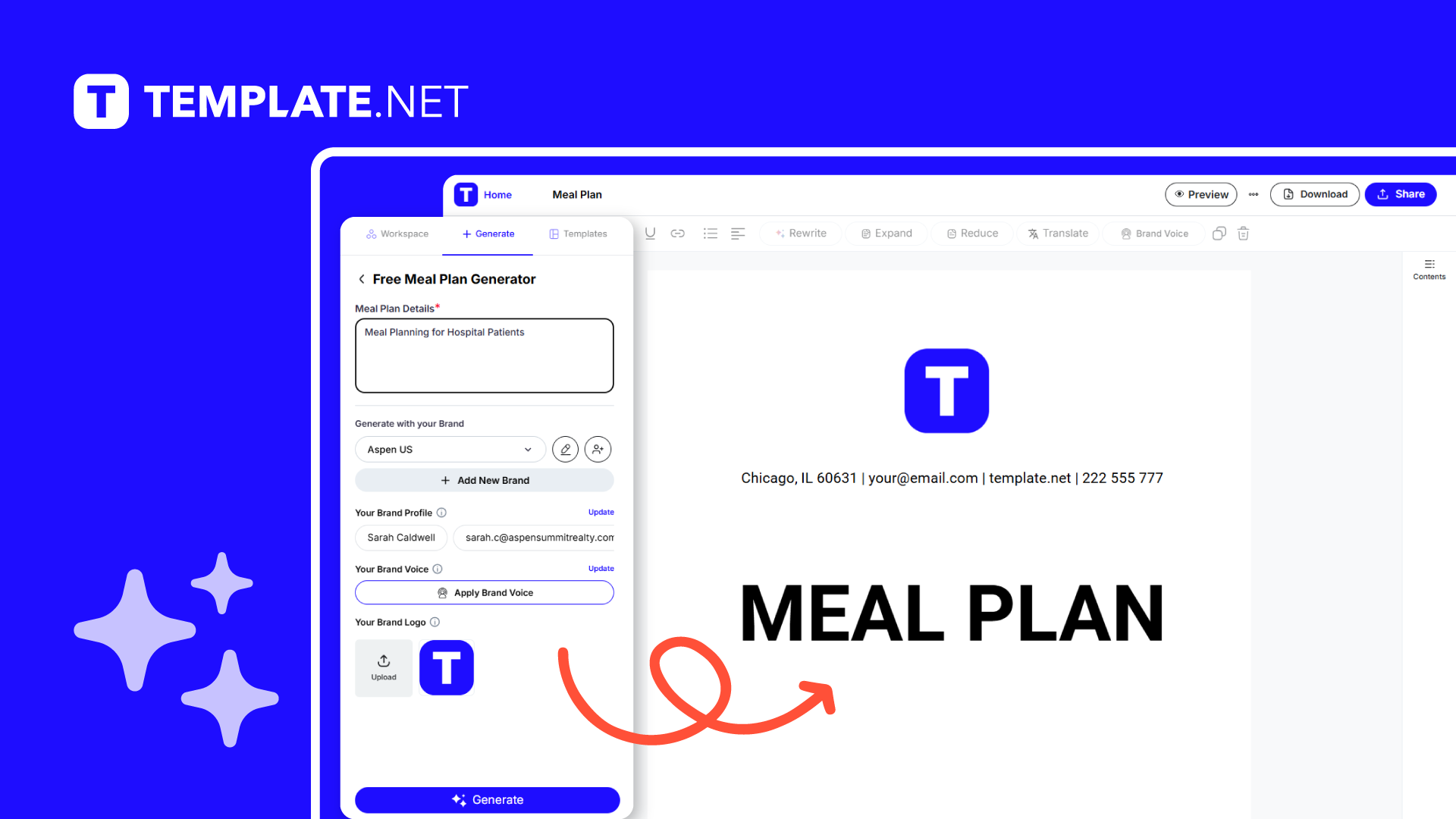

Here’s an example of how an AI system should work for a user, from entering a prompt to editing the resulting template.

Users are often the first to detect AI errors, especially in customer-facing products. Encouraging them to report issues helps improve model performance and strengthens trust, whether they are using your AI products, your template library, or both.

A strong feedback loop should:

AI improves fastest when real users can participate in the refinement process. Their perspective reveals blind spots internal teams may overlook.
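To show how user reports might feed back into refinement, here is a minimal sketch that stores each report with a category and tallies categories so the most common problems surface first. The `FeedbackReport` fields and the category labels (borrowed from the definition of hallucinations earlier in this article) are assumptions for illustration, not a prescribed schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class FeedbackReport:
    response_id: str  # hypothetical identifier of the AI response being reported
    category: str     # e.g. "fabricated", "irrelevant", "misleading"
    comment: str = ""


def summarize(reports):
    """Count reports per category, most frequent first."""
    return Counter(report.category for report in reports).most_common()


reports = [
    FeedbackReport("r-1", "fabricated", "Cited a source that does not exist."),
    FeedbackReport("r-2", "irrelevant"),
    FeedbackReport("r-3", "fabricated"),
]
print(summarize(reports))
```

Even a tally this simple helps a team decide which kind of error to investigate first.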

AI systems that rely on a single data type—only text, only images, or only audio—often have limited understanding of context. Multimodal learning addresses this by integrating different forms of input.

For example:

By blending inputs, AI models build a more holistic understanding of scenarios, which greatly reduces the likelihood of hallucinating missing information.

AI hallucinations remain one of the most persistent challenges in modern artificial intelligence, but they are far from inevitable. By understanding the causes, strengthening data quality, running robust tests, monitoring live performance, encouraging feedback, and embracing multimodal techniques, developers and teams can dramatically reduce these issues.

Reliable AI doesn’t come from hoping the model behaves—it comes from designing systems with safeguards, oversight, and continuous improvement. Implement these practices in your next AI project and you’ll be better equipped to develop applications that are not only innovative but genuinely trustworthy.
